Insights

INSIGHTS

All Topics

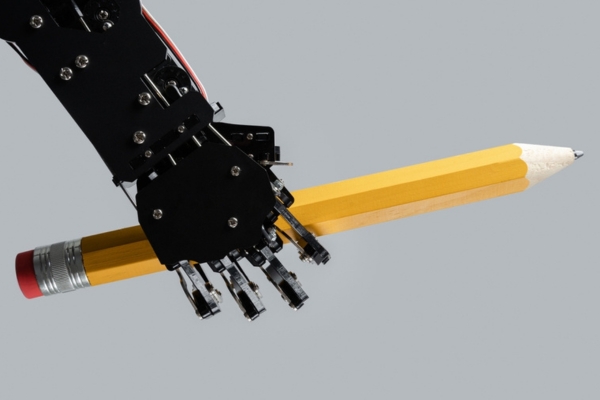

The risks of using content bots

21 Sep 2023 by Elaine Taylor

